EU AI Act Article 14: Legal Obligations for High-Risk AI Systems Explained

Main takeaway: Article 14 of the EU AI Act makes meaningful human oversight a legal requirement for all high-risk AI systems. If an organisation deploys or provides such systems, it must ensure trained humans can understand, monitor, override and, if necessary, shut them down safely.

What Is Article 14 of the EU AI Act?

Article 14 is the core provision on human oversight for high-risk AI systems. It requires that these systems be designed, developed and deployed in a way that allows natural persons (humans) to effectively oversee them throughout their use.

The goal is not to slow down innovation, but to ensure that AI does not create unacceptable risks to:

- Health

- Safety

- Fundamental rights (e.g. privacy, non-discrimination, due process)

In other words: high-risk AI can be powerful, but it cannot be left on autopilot.

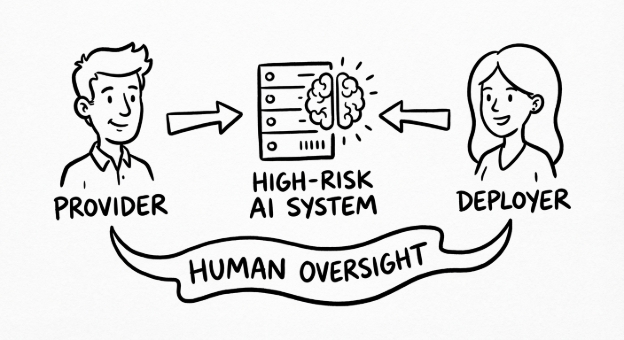

Who Does Article 14 Apply To?

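Article 14 applies to high-risk AI systems as classified under Article 6. These are AI systems that are, or are safety components of, products covered by the EU harmonisation legislation in Annex I (such as medical devices), and AI systems used in the areas listed in Annex III. Typical examples include: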

- Creditworthiness assessment and credit scoring of individuals, and risk assessment and pricing in life and health insurance

- AI used in recruitment, promotion, or access to education

- AI used in critical infrastructure (e.g. energy, transport)

- Biometric identification and categorisation systems

- AI in medical devices, including clinical decision support software regulated as a medical device (high-risk via Annex I rather than Annex III)

- AI used in law enforcement, migration, asylum and border control, or the administration of justice

Two roles are particularly affected:

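- Providers: Those who develop an AI system, or have it developed, and place it on the market or put it into service under their own name or trademark

- Deployers: Those who use an AI system under their authority, other than in a personal, non-professional activity

Their obligations are distinct but complementary. Article 14 sets the oversight design requirements that providers must meet, while the deployer's duty to assign and support human oversight in daily use is set out in Article 26.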

Core Legal Obligation 1: Design for Effective Human Oversight

Article 14(1) requires that high-risk AI systems be designed and developed with human-machine interface tools that allow effective oversight by natural persons during the entire period of use.

In practice, this means:

- Oversight must be possible in real time or at least at the relevant decision points

- Interface elements must make it possible to understand what the system is doing

- The system must support human intervention when needed

This obligation sits directly with the provider: if the system is designed in a way that humans cannot realistically control it, the provider is not compliant.

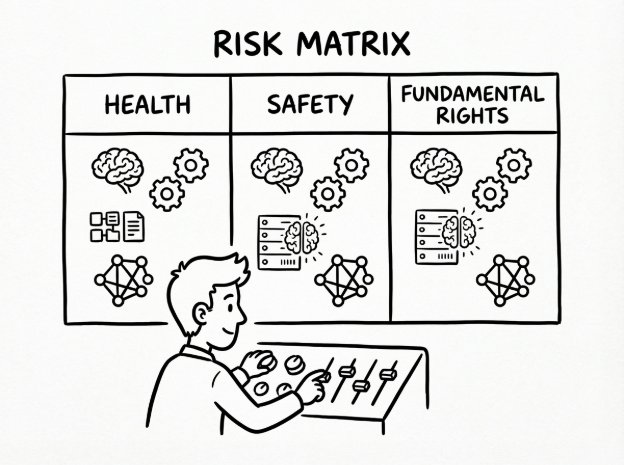

Core Legal Obligation 2: Oversight Must Address Risks to Health, Safety, and Rights

Under Article 14(2), the purpose of human oversight is to prevent or minimise risks to:

- Health

- Safety

- Fundamental rights

This applies even when the system is used:

- According to its intended purpose, or

- Under conditions of reasonably foreseeable misuse

Key point: being technically compliant in other areas (e.g. data quality, robustness) is not enough if human oversight is ineffective. Oversight must actually mitigate the risks that persist despite those other requirements.

Core Legal Obligation 3: Oversight Measures Must Be Proportionate

Article 14(3) states that oversight measures must be commensurate with:

- The system’s risk level

- The level of autonomy

- The context of use

This means:

- More autonomous and impactful systems require stricter oversight

- Low-risk usage scenarios may allow lighter oversight, as long as risk is genuinely lower

Oversight can be ensured via:

- Built-in measures (technical features the provider builds into the system itself), and/or

- Measures the provider identifies for the deployer to implement (procedures, policies, workflows)

Providers must:

- Identify and, where technically feasible, build in oversight features before market placement

- Define which oversight measures must be implemented by deployers (e.g. role assignments, escalation paths, approvals)
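To make the proportionality principle concrete, here is a minimal Python sketch of how a provider might derive an oversight plan from risk and autonomy ratings. The three-point `Level` scale, the `plan_oversight` function, the score cut-offs and the review rates are all hypothetical; Article 14 prescribes no formula, and a real assessment would also weigh the context of use.

```python
from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    """Hypothetical three-point rating used for both risk and autonomy."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass(frozen=True)
class OversightPlan:
    pre_approval: bool       # a human must approve each output before it takes effect
    post_review_rate: float  # share of outputs audited by a human after the fact


def plan_oversight(risk: Level, autonomy: Level) -> OversightPlan:
    """Scale oversight with risk and autonomy (illustrative cut-offs, not from the Act)."""
    score = int(risk) * int(autonomy)  # ranges from 1 to 9
    if score >= 6:
        # One rating is HIGH and the other is at least MEDIUM.
        return OversightPlan(pre_approval=True, post_review_rate=1.0)
    if score >= 3:
        return OversightPlan(pre_approval=False, post_review_rate=0.25)
    return OversightPlan(pre_approval=False, post_review_rate=0.05)
```

Even in the lightest tier, the override and stop capabilities required by Article 14(4) stay in place; the sketch only varies how much routine human review is applied.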

Core Legal Obligation 4: What Human Oversight Must Enable in Practice

Article 14(4) lists concrete capabilities that the system must give the people assigned to oversee it, “as appropriate and proportionate”. These are not a menu of nice-to-haves; they define what “effective” oversight means in legal terms.

1. Understanding System Capabilities and Limitations

Humans assigned oversight must be able to:

- Understand what the system can and cannot do

- Recognise typical failure modes, limitations, and uncertainty

- Monitor the system’s operation to detect and address anomalies, dysfunctions and unexpected performance

This requires:

- Clear documentation

- Training materials

- Accessible explanations in the UI

2. Awareness of Automation Bias

Article 14 explicitly mentions automation bias: the human tendency to over-rely on AI outputs.

Oversight must ensure that humans:

- Are aware that AI outputs can be wrong

- Do not blindly accept recommendations

- Understand the need for independent judgment, especially for sensitive decisions

This often requires:

- Training programs for staff

- UI prompts or warnings reminding users to critically assess outputs

- Clear policies that AI outputs are decision support, not automatic decisions

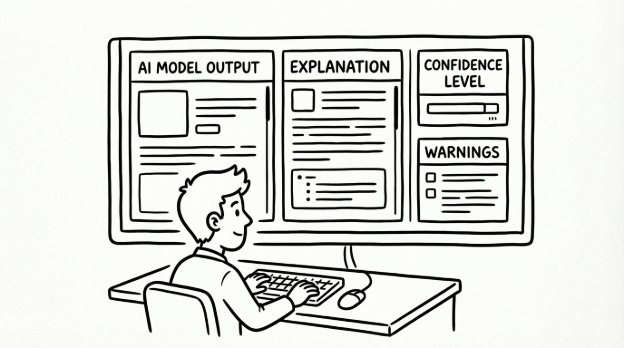

3. Correct Interpretation of Outputs

Humans must be able to interpret outputs correctly, taking into account the interpretation tools and methods available to them. This includes:

- Understanding scores, probabilities, and confidence levels

- Knowing the meaning of alerts and flags

- Interpreting explanations and supporting evidence

If users can’t interpret the outputs, the system fails the Article 14 test, even if the underlying model is sophisticated.

4. Ability to Disregard, Override, or Not Use the System

A core requirement: humans must be able to decide, in any specific situation:

- Not to use the AI system

- To disregard its output

- To override or reverse its recommendations or decisions

Implications for design and policy:

- No “locked-in” workflows where AI outputs cannot be challenged

- Clear escalation paths for overrides

- Documentation of when and why overrides occur (for audit and continuous improvement)
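The override capabilities and the audit point above can be captured in a single decision record. The following Python sketch shows one way to do it, assuming a hypothetical `OversightLog` that refuses any departure from the AI output unless the reviewer records both a final decision and a reason. The class names, fields and rules are illustrative, not taken from the Act or any standard.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum
from typing import Optional


class Action(Enum):
    ACCEPT = "accept"        # reviewer adopts the AI output as the decision
    DISREGARD = "disregard"  # reviewer sets the AI output aside and decides independently
    OVERRIDE = "override"    # reviewer replaces or reverses the AI output
    NOT_USED = "not_used"    # reviewer chose not to use the system for this case


@dataclass(frozen=True)
class OversightRecord:
    case_id: str
    reviewer: str
    ai_output: Optional[str]  # None when the system was not used
    action: Action
    final_decision: str
    reason: str
    timestamp: datetime


@dataclass
class OversightLog:
    records: list = field(default_factory=list)

    def decide(self, case_id: str, reviewer: str, ai_output: Optional[str],
               action: Action, final_decision: Optional[str] = None,
               reason: str = "") -> OversightRecord:
        if action is Action.ACCEPT:
            if ai_output is None:
                raise ValueError("cannot accept an output that does not exist")
            final_decision = ai_output
        elif final_decision is None or not reason.strip():
            # Any departure from the AI output needs a human decision and a reason.
            raise ValueError("a final decision and a reason are required")
        record = OversightRecord(case_id, reviewer, ai_output, action,
                                 final_decision, reason,
                                 datetime.now(timezone.utc))
        self.records.append(record)  # append-only trail for audits
        return record
```

For example, `log.decide("c2", "bob", "reject", Action.OVERRIDE, final_decision="approve", reason="income verified manually")` stores a record that an auditor can later trace back to the reviewer and the stated reason, while an override without a reason is rejected and never logged.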

5. Ability to Safely Stop or Interrupt the System

Article 14 also requires that:

- Humans must be able to intervene in the operation of the system

- They must be able to interrupt it via a “stop” button or similar procedure

- The system must come to a safe state when stopped

This is particularly critical for:

- Real-time systems in critical infrastructure

- Systems controlling physical devices or processes

- Large-scale decision automation impacting many individuals
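For systems like these, the “stop” requirement comes down to two properties: a human can halt the system at any time, and halting always ends in a safe state. The following is a minimal Python sketch, assuming hypothetical `step` and `enter_safe_state` callbacks supplied by the integrator. What counts as “safe” is domain-specific, for example closing a valve or pausing automated decisions.

```python
import threading


class StoppableController:
    """Runs control steps in a background thread until an operator stops it.

    `step` and `enter_safe_state` are hypothetical callbacks supplied by the
    integrator; `interval` is the pause between steps in seconds.
    """

    def __init__(self, step, enter_safe_state, interval: float = 0.01):
        self._step = step
        self._enter_safe_state = enter_safe_state
        self._interval = interval
        self._stop_requested = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self.in_safe_state = False

    def start(self) -> None:
        self._thread.start()

    def _run(self) -> None:
        try:
            while not self._stop_requested.is_set():
                self._step()
                # Waiting on the event (not time.sleep) lets a stop request cut the pause short.
                self._stop_requested.wait(self._interval)
        finally:
            # Reached on a human stop and also if a step raises an exception.
            self._enter_safe_state()
            self.in_safe_state = True

    def stop(self, timeout: float = 5.0) -> bool:
        """The "stop button": request a halt, wait for the worker, report the outcome."""
        self._stop_requested.set()
        self._thread.join(timeout)
        return self.in_safe_state
```

A `False` return from `stop()` means the safe state was not confirmed within the timeout, which in practice should trigger an escalation procedure rather than be silently ignored.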

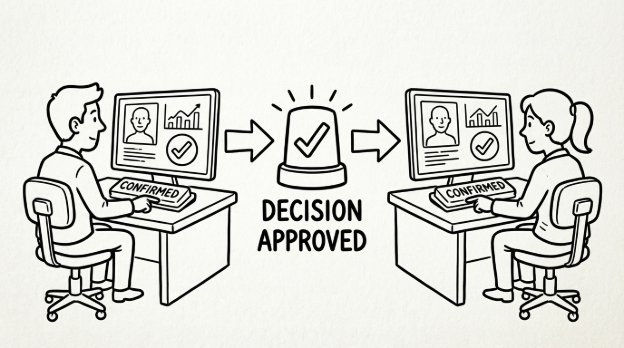

Special Requirement: Double Human Verification for Certain Biometric Systems

Article 14(5) creates an additional, stricter obligation for remote biometric identification systems, the high-risk systems listed in Annex III, point 1(a).

For these systems, the deployer may not take any action or decision based on the system’s identification result unless that identification has been separately verified and confirmed by at least two natural persons with the necessary competence, training and authority.

This is a powerful safeguard against:

- False positives

- Misidentification

- Unchecked reliance on facial recognition or other biometric outputs

There is an exception: the two-person requirement does not apply to systems used for law enforcement, migration, border control or asylum where EU or national law considers its application disproportionate. Outside that carve-out, two-human confirmation is the rule.
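In software, the default two-person rule can be enforced as a gate that blocks any action on a match until two distinct, qualified people have confirmed it. The Python sketch below assumes hypothetical `trained` and `authorised` flags supplied by the deployer’s identity system. It cannot prove that the two checks were genuinely separate, so organisational measures still matter, and whether the law-enforcement exception applies is a legal question the code does not decide.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Verifier:
    user_id: str
    trained: bool     # completed the required training (hypothetical flag)
    authorised: bool  # holds authority to confirm identifications (hypothetical flag)


@dataclass
class BiometricMatch:
    match_id: str
    confirmations: dict = field(default_factory=dict)  # user_id -> Verifier

    def confirm(self, verifier: Verifier) -> None:
        if not (verifier.trained and verifier.authorised):
            raise PermissionError(f"{verifier.user_id} lacks the required training or authority")
        # Keyed by user_id, so one person confirming twice still counts once.
        self.confirmations[verifier.user_id] = verifier

    def may_act(self, required: int = 2) -> bool:
        """True only once at least `required` distinct, qualified people have confirmed."""
        return len(self.confirmations) >= required
```

Keying confirmations by person rather than counting clicks is the design choice that matters here: it stops a single operator from satisfying the rule alone.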

Provider Obligations Under Article 14

Providers must ensure that their high-risk AI systems:

- Are designed and developed to enable effective human oversight

- Include appropriate human-machine interface tools

- Explicitly specify which oversight measures are to be implemented by the deployer

- Provide clear documentation and instructions for oversight, training, and risk mitigation

- Enable override, interruption, and safe halt mechanisms

To be compliant, providers typically need to:

- Integrate explainability and monitoring capabilities

- Document limitations and foreseeable misuse scenarios

- Deliver user manuals, training guidance, and governance recommendations

- Implement role-based access controls and logging

Deployer Obligations Under Article 14

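Deployers (organisations using the high-risk AI system) put oversight into practice; most of their corresponding duties sit in Article 26 rather than in Article 14 itself. They must:

- Assign human oversight to individuals with the necessary competence, training and authority, and give them the support they need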

- Ensure those individuals understand the system and its limits

- Put in place procedures for overrides, escalation and emergency stops

- Monitor the AI system in daily operation and detect anomalies

- For remote biometric identification systems, enforce two-person verification where it applies

Operationally, that means:

- Updating internal policies and SOPs to embed human oversight

- Training decision-makers and operators

- Defining thresholds for manual review and intervention

- Keeping records of interventions, overrides and incidents
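Manual-review thresholds are easiest to audit when they are written down as an explicit rule. The following is a minimal Python sketch, assuming the system returns an outcome label and a confidence score between 0 and 1. The 0.85 cut-off and the choice to always review rejections are hypothetical values that a deployer would set from its own risk assessment, and a confidence threshold only helps if the scores are reasonably calibrated.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReviewPolicy:
    # Hypothetical values; set these from the deployer's own risk assessment.
    min_confidence: float = 0.85
    always_review_outcomes: frozenset = frozenset({"reject"})


def needs_manual_review(outcome: str, confidence: float, policy: ReviewPolicy) -> bool:
    """Decide whether an AI output must go to a human before it takes effect."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    return confidence < policy.min_confidence or outcome in policy.always_review_outcomes
```

With the default policy, a confident approval passes straight through, while a low-confidence approval or any rejection is routed to a reviewer.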

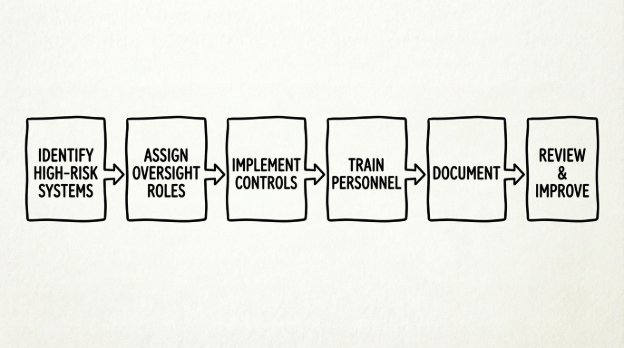

Practical Steps to Achieve Article 14 Compliance

Organisations looking to comply can follow a practical roadmap:

1. Map Use Cases and Risk Levels

Identify which AI systems are high-risk and understand their autonomy and impact.

2. Define Human Oversight Roles

Specify which roles (e.g. case worker, risk analyst, supervisor) are responsible for oversight.

3. Implement Oversight Controls

- Add clear dashboards, alerts, and logging

- Add override and stop mechanisms

- Define manual review checkpoints

4. Train Oversight Personnel

Train oversight staff on system capabilities, limitations, and automation bias.

5. Document Everything

- Oversight procedures

- Training materials

- Incident and override logs

6. Review and Improve Continuously

Use evidence from overrides, incidents and user feedback to refine oversight mechanisms over time.
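For the review step, override logs can be turned into a simple signal. The sketch below computes, per model version, the share of AI outputs that reviewers did not accept, assuming a hypothetical export of `(model_version, accepted)` pairs and a hypothetical 20% alert threshold. A rising rate may point to model drift, while a rate near zero on sensitive decisions may hint at automation bias, so either deserves a closer look rather than an automatic conclusion.

```python
from collections import Counter


def override_rates(decisions):
    """Return {model_version: share of outputs not accepted by reviewers}.

    `decisions` is an iterable of (model_version, accepted) pairs, a
    hypothetical export from an oversight log.
    """
    totals, not_accepted = Counter(), Counter()
    for version, accepted in decisions:
        totals[version] += 1
        if not accepted:
            not_accepted[version] += 1
    return {version: not_accepted[version] / totals[version] for version in totals}


def versions_to_review(rates, max_rate=0.2):
    """List versions whose override rate exceeds a hypothetical alert threshold."""
    return sorted(version for version, rate in rates.items() if rate > max_rate)
```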

Why Article 14 Is a Strategic Opportunity, Not Just a Compliance Burden

While Article 14 is a legal obligation, it also creates strategic benefits:

- Reduced operational risk: Human oversight catches issues models miss.

- Improved trust: Customers, regulators, and partners gain confidence when humans remain in control.

- Better model performance: Human feedback and overrides provide rich data to improve models over time.

- Future-proofing: A strong oversight framework today makes it easier to adapt to future regulations.

Organisations that treat Article 14 as governance infrastructure rather than a checkbox exercise will be better positioned to scale AI safely and responsibly.

Conclusion

Article 14 of the EU AI Act reshapes how high-risk AI systems must be designed, governed, and operated. It requires:

- Built-in human oversight capabilities

- Clear, proportionate controls aligned with risk and autonomy

- Trained human overseers with authority to override and stop the system

- Special double-verification for certain biometric systems

For providers and deployers, compliance is not optional. But when implemented thoughtfully, Article 14 transforms oversight from a regulatory headache into a competitive advantage: delivering safer, more trustworthy, and more accountable AI.